Organization

University of Central Florida — College of Engineering and Computer Science

About

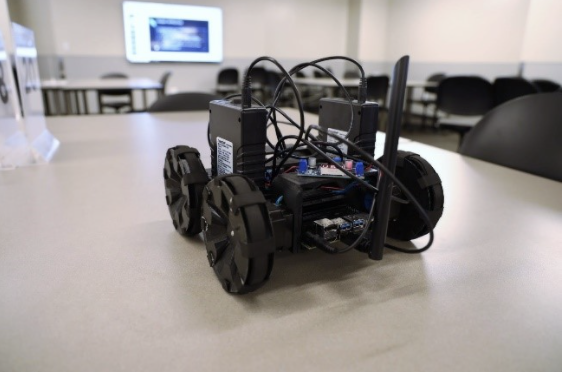

The RE-RASSOR Project is a joint FSI, Florida Space Grant, and NASA project to develop, mature, and extend the EZ-RASSOR software and the Mini-RASSOR hardware. Its goals include ready-to-use rover systems and building blocks for research projects; extensions and enhancements to those systems; and meaningful experiences and systems to support advanced STEM/STEAM education. Production-ready systems, components, and open-source hardware and software are all to be made available.

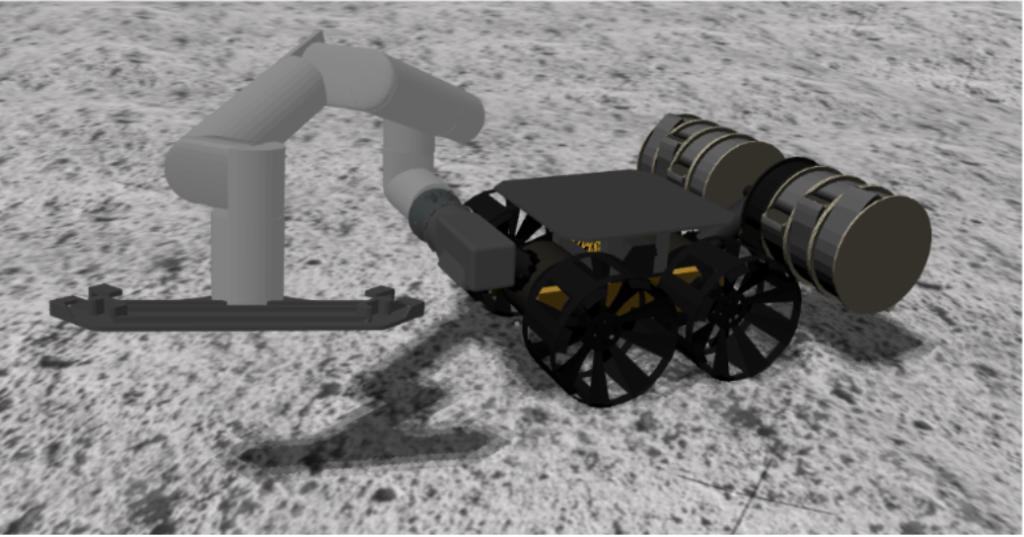

The Arm Autonomous Operations team created software that works with a cooperative group of RE-RASSOR robots to build a landing pad or road on the surface of the Moon. The arm software uses visual information to precisely place Kennedy Space Center-informed pavers in support of landing pad construction.

Pipeline

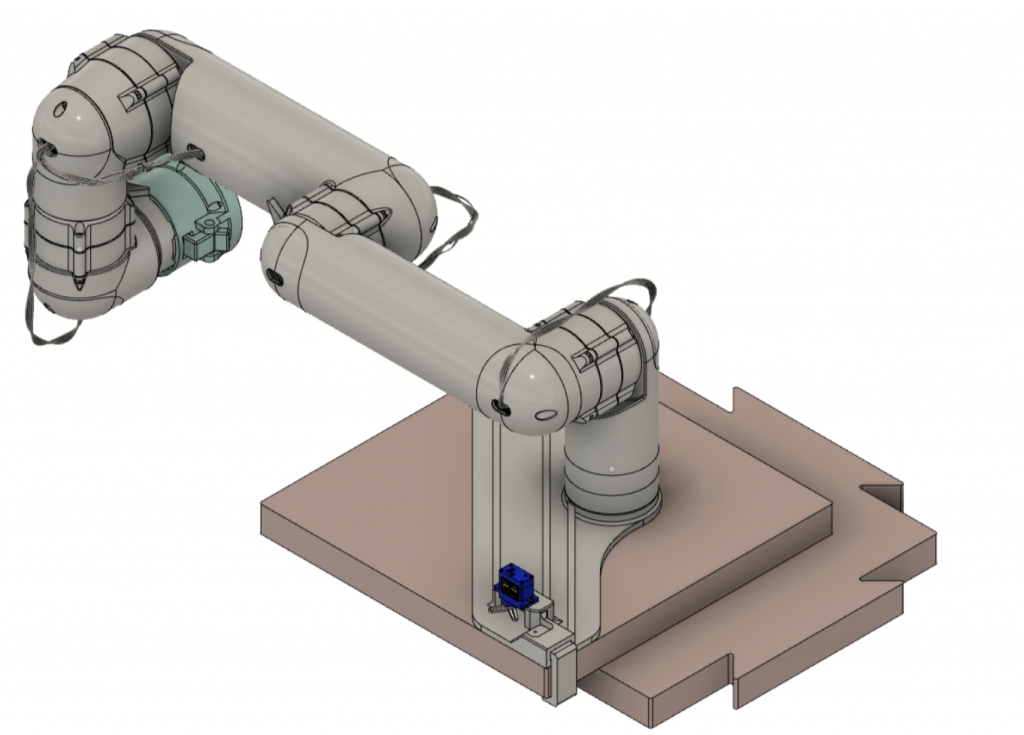

To accomplish this goal, we first built a simulation in Gazebo. This involved adding the arm model and its drivers to the existing rover model, and adding the paver model to the simulation's model database. Once the arm model was integrated, we mounted a camera on the rover next to the arm to supply images to a visual network.

Before each placement, the visual network takes an image and determines the previous paver's position in Cartesian space as a 3D bounding box. The center of that bounding box is sent to the arm's autonomous controller, which is built with the MoveIt ROS package. The controller uses inverse kinematics together with the information from the visual network to direct the arm to the proper location for placement. Finally, our modified driving algorithm, which builds on the rover's existing autonomous driving functions, returns the rover to its spawn point to generate a new paver and then drives it to the next placement location.

We also added arm functionality to the existing gamepad mobile app, which connects to the simulated or real-world rover through a local HTTP server. This gamepad functionality was added primarily as a learning tool, since it lets students interact with the arm directly and hands-on.
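The hand-off from the visual network to the arm controller can be illustrated with a minimal sketch. It assumes the 3D bounding box is axis-aligned and described by its minimum and maximum corners in meters; the `BoundingBox3D` class, the `next_paver_target` helper, and the `spacing_x` parameter are hypothetical names chosen for illustration and are not taken from the project's code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox3D:
    """Axis-aligned 3D box given by its min and max corners (assumed meters)."""
    x_min: float
    y_min: float
    z_min: float
    x_max: float
    y_max: float
    z_max: float

    def center(self):
        """Return the (x, y, z) midpoint of the box."""
        return (
            (self.x_min + self.x_max) / 2.0,
            (self.y_min + self.y_max) / 2.0,
            (self.z_min + self.z_max) / 2.0,
        )


def next_paver_target(previous_box, spacing_x):
    """Offset the previous paver's center along x by a hypothetical spacing.

    This is only an illustration of how a placement target could be derived
    from the detected paver; the real offset depends on the paver geometry.
    """
    cx, cy, cz = previous_box.center()
    return (cx + spacing_x, cy, cz)


if __name__ == "__main__":
    # Hypothetical detection of the previously placed paver.
    detected = BoundingBox3D(0.0, -0.25, 0.0, 0.5, 0.25, 0.125)
    print("previous paver center:", detected.center())
    print("next placement target:", next_paver_target(detected, spacing_x=0.5))
```

In a setup like the one described, the resulting point would be passed to the arm controller as a goal position, with the controller responsible for finding joint angles that reach it.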

Technologies

- ROS – The Robot Operating System (ROS) is a framework for interacting with and controlling robotic components, both in the real world and in simulation

- Gazebo – Simulation software used to generate a simulated environment; it includes many useful tools for interacting with ROS

- Blender – Modeling software used to create the meshes for the arm model that was added to Gazebo, as well as to generate training images for the first version of our visual network

- TensorFlow – A machine learning library, used here from Python, that facilitates the creation and use of deep neural networks; we used it for object detection in our visual network

- OpenCV – A computer vision library, used here from Python, that facilitates working with and converting images

- React Native – A framework for developing mobile applications with React; it is similar to the React JavaScript library used for web development

- MoveIt – A ROS package for creating controllers for robotic manipulators such as our arm; it uses the arm's Unified Robot Description Format (URDF) model to set up a kinematics controller
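To give a feel for what a kinematics controller computes, the sketch below solves inverse kinematics analytically for a planar two-link arm. This is a textbook simplification and not MoveIt's solver or the project's arm model; the function names and the link lengths used in the example are assumptions made for illustration.

```python
import math


def two_link_ik(x, y, l1, l2):
    """Return (shoulder, elbow) angles in radians that place the tip at (x, y).

    Analytic solution for a planar two-link arm with link lengths l1 and l2.
    Of the two possible solutions, this returns the one with a non-negative
    elbow angle. Raises ValueError if the target cannot be reached.
    """
    d2 = x * x + y * y
    # Law of cosines gives the elbow angle from the target distance.
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target is outside the arm's reachable workspace")
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def two_link_fk(shoulder, elbow, l1, l2):
    """Forward kinematics: tip position for the given joint angles."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


if __name__ == "__main__":
    # Hypothetical link lengths (meters) and target point.
    angles = two_link_ik(0.3, 0.2, l1=0.25, l2=0.25)
    print("joint angles (rad):", angles)
    print("reached point:", two_link_fk(*angles, l1=0.25, l2=0.25))
```

Running forward kinematics on the returned angles is a quick check that the solution reaches the requested point. A real manipulator has more joints and constraints, which is why a package such as MoveIt derives its kinematics from the URDF instead of a closed-form formula like this one.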

Project Sponsors

Further Acknowledgements

Mark Heinrich – UCF

Project Team

Robert Forristall (Project Manager, ROS Control/Integration, Autonomous/Manual Arm Control): robert.forristall@knights.ucf.edu

Luca Gigliobianco (Arduino Software Development, Jetson Nano Integration): lucagglbnc@knights.ucf.edu

Cooper Urich (React Native Developer): Cooperurich@knights.ucf.edu

Christopher Jackson (Visual Network): cjackson0416@knights.ucf.edu

Austin Dunlop (Simulation): austin.d@knights.ucf.edu